Larry Wall once said that the three great virtues of a programmer are laziness, impatience, and hubris. This post is about laziness.

I've been working on speeding up an ETL process that takes about 48 hours to run. The ETL consists of about three dozen individual tasks; some can be run in parallel while others have interdependencies. Like all good background processes, it generates a log file, which looks something like this (task names have been changed to protect the innocent):

$ cat etl.log

...

2015-04-22 06:11:55 [INFO ] [Mary] Transform starting.

2015-04-22 06:11:55 [INFO ] [Miriam] Transform starting.

2015-04-22 06:12:12 [INFO ] [Miriam] Transforming data completed. Value tests starting.

2015-04-22 06:12:14 [INFO ] [Miriam] Value tests done. All tests passed.

2015-04-22 06:12:14 [INFO ] [Miriam] Transform completed with warnings in 19.38 s.

2015-04-22 07:35:38 [INFO ] [Mabel] Transform starting.

2015-04-22 12:50:31 [INFO ] [Mabel] Transforming data completed. Value tests starting.

2015-04-22 12:50:48 [INFO ] [Mabel] Value tests done. All tests passed.

2015-04-22 12:50:48 [INFO ] [Mabel] Transform completed with warnings in 18,909.7 s (315.16 min).

2015-04-23 03:03:12 [INFO ] [Mary] Transforming data completed. Value tests starting.

2015-04-23 04:11:43 [INFO ] [Mary] Value tests done. All tests passed.

2015-04-23 04:11:43 [INFO ] [Mary] Transform completed with warnings in 79,188.79 s (1,319.81 min).

...

One simple thing we can do is figure out which parts of the process are making it slow. Since each task reports how long it takes to complete, this just requires some grep and regex magic on the log file:

$ grep "\d s" etl.log | ruby -pe "gsub(/^[\d\s:-]+ \[.{5}\] \[(.+?)\] .+ ([\d,\.]+ s).+/, '\1: \2')"

...

Miriam: 19.38 s

Mabel: 18,909.7 s

Mary: 79,188.79 s

...

This narrows things down a lot. Like Miriam, most of the tasks are quick. There's no point in optimizing a 19-second task when others take many hours to run.

There's one catch, though: because tasks can run in parallel, the fact that a particular task is slow doesn't mean that it extends the overall process. Suppose that Mabel and Mary both start at midnight; they'll finish at 6 am and 10 pm, respectively. Even if we can make Mabel six times faster so that it finishes at 1 am, Mary (and thus the entire ETL) will still run until 10 pm.

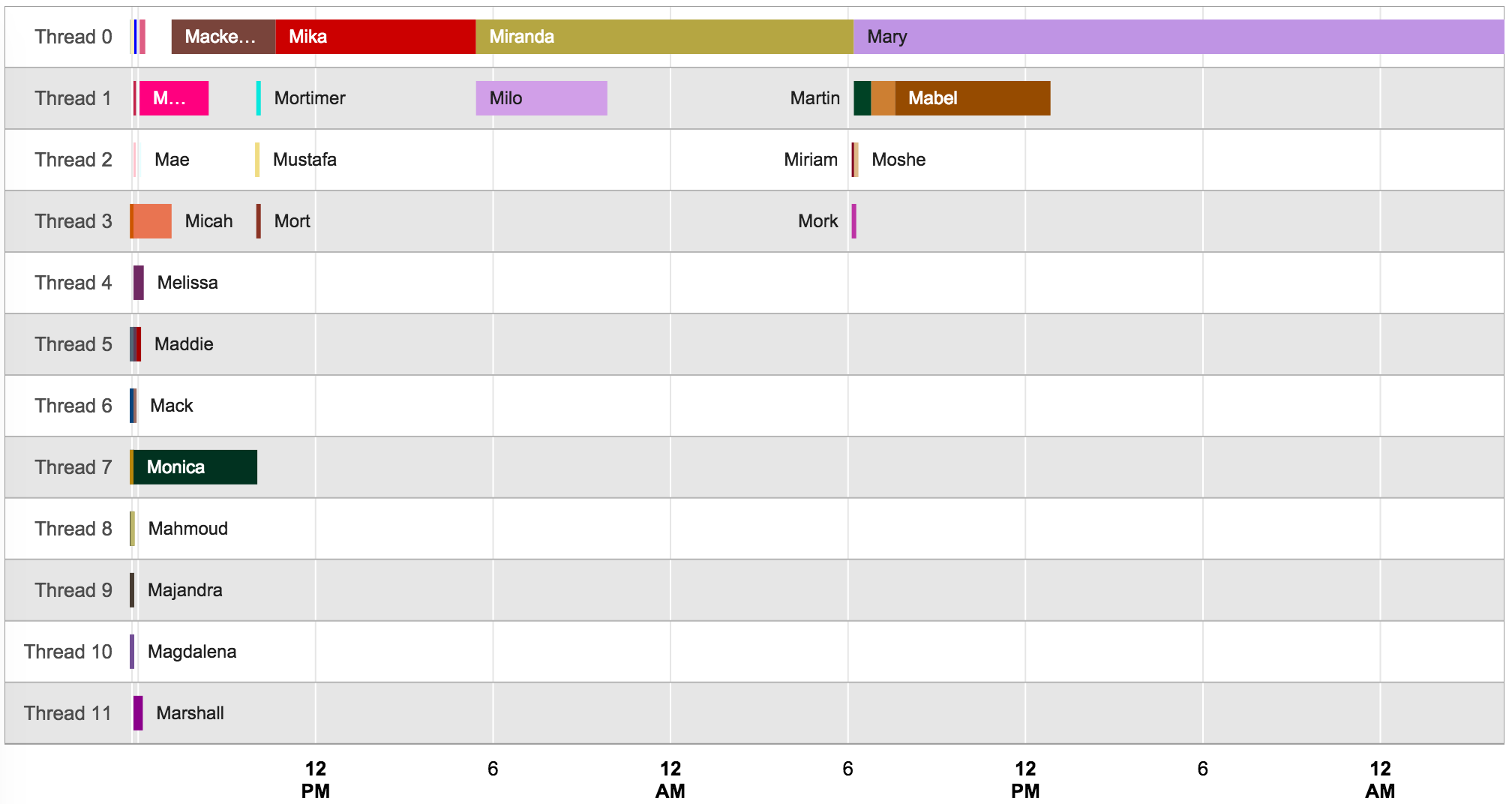

In order to see which tasks are in the critical path, it would be helpful to see them on a timeline. The algorithm for building a timeline looks something like this:

- Read the log file

- Add each task to a data structure; store the name, start time, and end time

- Create an array representing the worker threads

- Figure out which thread ran each task (this would be easier if the thread number were in the log file)

- Draw a timeline with a swimlane for each thread

Exactly how I did this isn't terribly important (you can see the code in this gist if you're so inclined); suffice it to say that I whipped up a short ruby script for the first four steps and used the timeline widget from Google's Charts library for the fifth.

Here's the result:

As I hinted at above, Mabel isn't the problem. The tasks that I ought to work on -- the long-running ones in the critical path -- are Mika, Miranda, and Mary. Also apparent from the timeline is that launching twelve threads isn't worthwhile. Certainly four would be just as good (0, 1, 3, and 7 in the chart), and perhaps three or even two would be sufficient.

So, what does this post have to do with laziness? Here's Larry Wall's definition:

"The quality that makes you go to great effort to reduce overall energy expenditure. It makes you write labor-saving programs that other people will find useful, and document what you wrote so you don't have to answer so many questions about it. Hence, the first great virtue of a programmer."

I could have -- and I have -- scrolled through the log files manually, eyeballing the timestamps and trying to identify the tasks that I should focus on. Now, having taken the time to write this little charting script once, I can chip away at those critical-path tasks and see at a glance if the overall runtime is getting better.